Xentara is a "real" real-time convergence platform that connects sensors to clouds.

The article outlines how real-time semantic middleware platforms like Xentara enable seamless integration of classical control systems with AI and cloud technologies. Unlike traditional architectures that isolate edge and cloud layers, Xentara supports distributed, time-synchronized data processing using a timing model and semantic interoperability. This allows microservices—including machine learning models—to operate in real time across networked edge controllers. The result is a scalable, responsive, and intelligent industrial environment where machines can adapt autonomously, improving efficiency and reducing complexity in industrial IoT systems.

On a distributed platform, classical control technology is seamlessly integrated with AI and cloud.

"Real" real-time may seem like an overdone expression at first. However, the phrase "real-time" is sometimes misunderstood for "fast." In reality, "real" real-time refers to a specified or modelable timeframe in which data and applications are handled simultaneously. Considering this factor results in considerable simplifications and improvements for both machines and production systems when compared to the traditional technique, which involves transferring data from sensors or PLCs to a cloud. In Xentara real-time means cycle times up to 100kHz with a Latency of about 1µs (Intel ATOM).

Surprisingly, the pioneers of Industry 4.0 have already demonstrated astonishing innovations in their reference architecture model.

*5305_Publication_GMA_Status_Report_ZVEI_Reference_Architecture_Model*

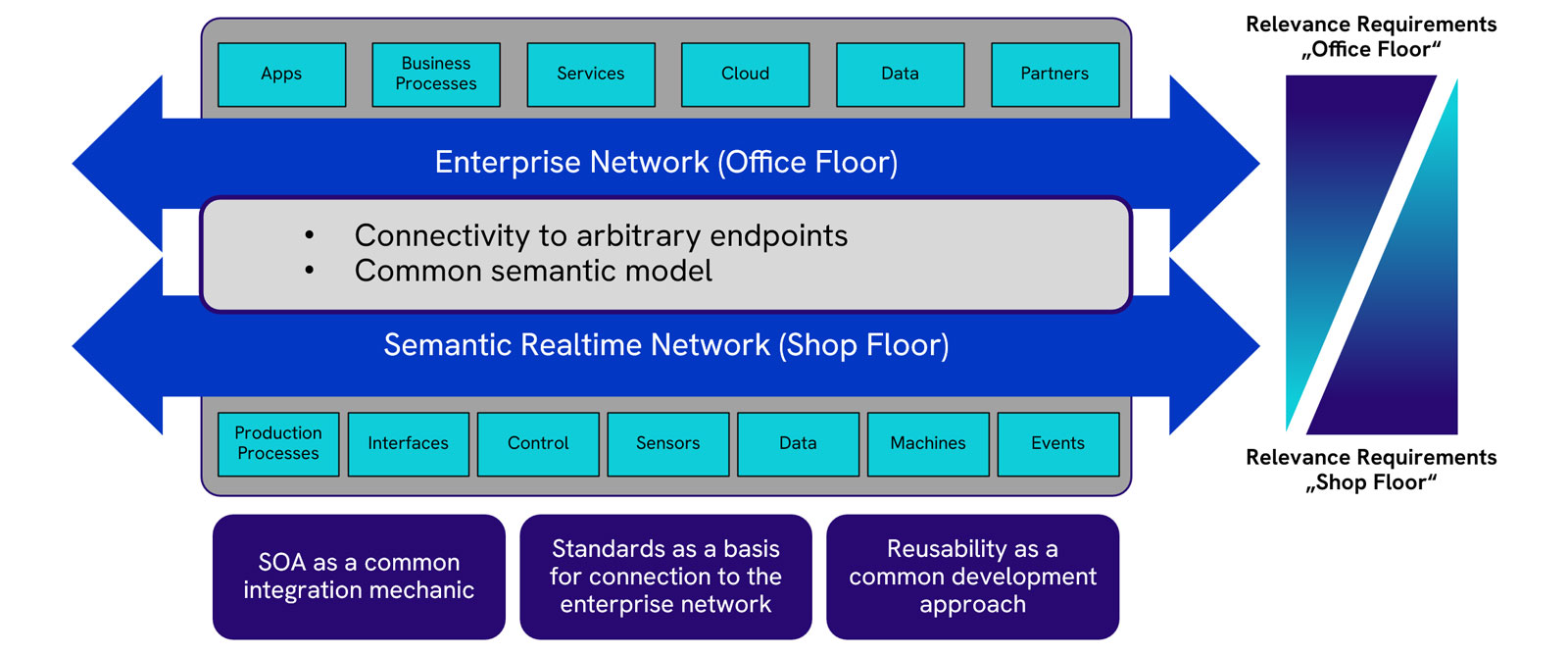

The functions of the so-called enterprise network are increasingly being mapped using cloud architectures that access a real-time network via semantic interoperability, abstracting the underlying assets with their sensors and controllers.

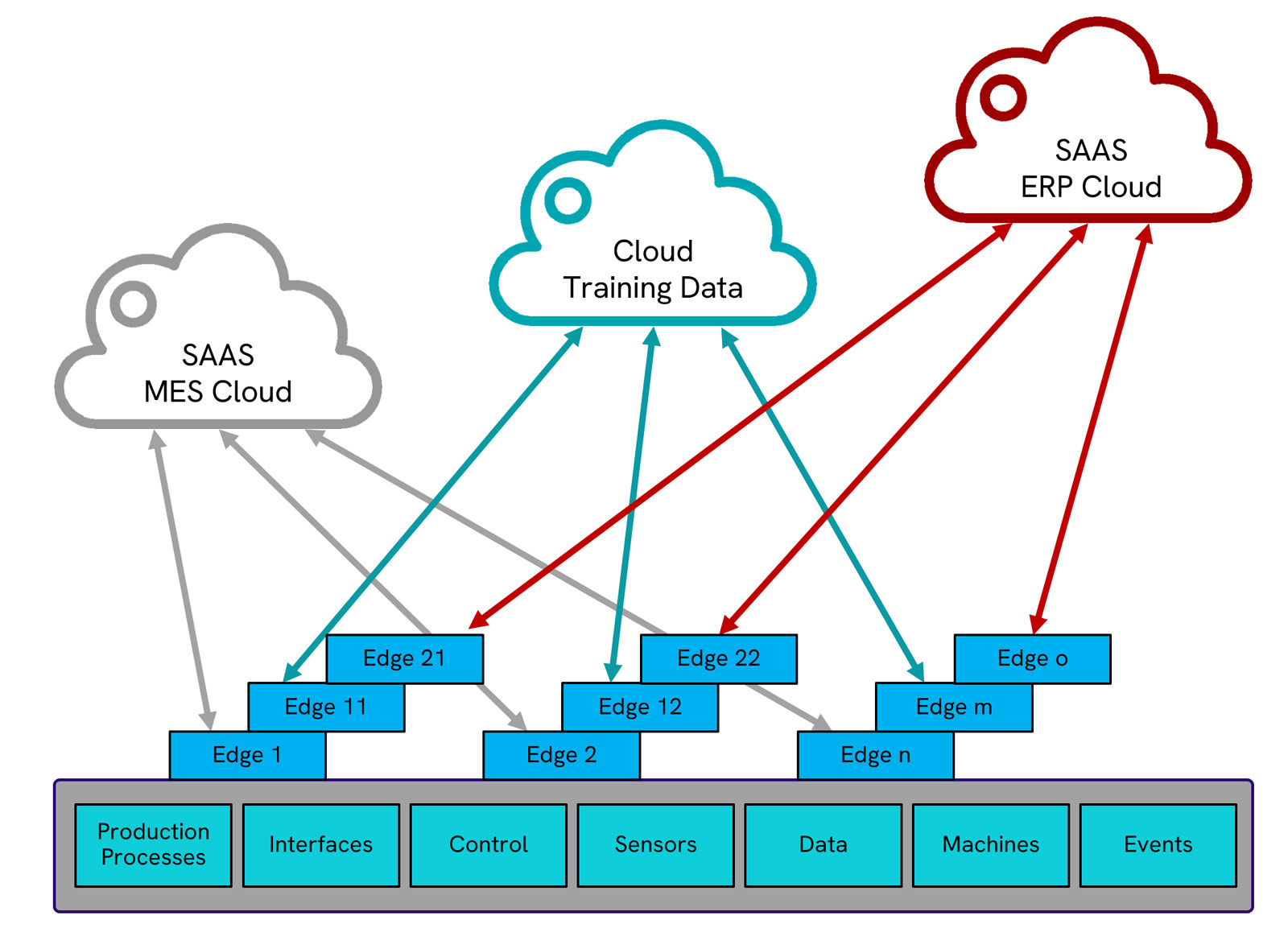

This ambitious strategy, however, has yet to be fully realized. Various edge controllers, which are in some way linked to different cloud technologies, retrieve and forward data directly to the cloud. There are no cross-connections between edge controllers, hence no overarching functions such as machine learning are possible.

This creates conflicting structures and excessive interface needs at the asset level, with little direct benefit. As a result, data moves from the asset level into isolated cloud silos. Any advanced functions that do exist at or between edges are dedicated to a single purpose. Building the system on an encompassing real-time platform simplifies the situation immediately.

Cloud technologies, with their proprietary edge approaches, no longer access assets directly. Instead, they rely on a uniform middleware platform that abstracts both the edge and asset layers. From the cloud's perspective, only a digital, object-oriented, semantic representation of the complete asset level is accessible, allowing for seamless communication.

As stated in the reference architecture model, semantic interoperability now allows for data exchange between the cloud and digital objects. At the same time, microservices communicate with assets through specified interfaces and change the semantic objects associated with them. This allows not only for data exchange between cloud and asset, but also for inter-asset connectivity through the middleware layer.
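To make the idea of a semantic object layer more concrete, here is a minimal sketch in Python. It is not Xentara's API: the classes `SemanticVariable`, `AssetObject`, and `Middleware`, the dotted path scheme, and the `pressure_limiter` microservice are all hypothetical names chosen for illustration. The point is only that a microservice reads and writes named, unit-carrying variables rather than talking to a device directly, which is also what makes inter-asset links possible.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SemanticVariable:
    """A named value with a unit (hypothetical model, not a Xentara type)."""
    name: str
    unit: str
    value: float = 0.0


@dataclass
class AssetObject:
    """Digital representation of one asset and its variables."""
    name: str
    variables: Dict[str, SemanticVariable] = field(default_factory=dict)


class Middleware:
    """Uniform access point; callers never touch the hardware directly."""

    def __init__(self) -> None:
        self.assets: Dict[str, AssetObject] = {}

    def register(self, asset: AssetObject, *variables: SemanticVariable) -> None:
        for variable in variables:
            asset.variables[variable.name] = variable
        self.assets[asset.name] = asset

    def _lookup(self, path: str) -> SemanticVariable:
        # Paths look like "asset.variable" (an assumed convention).
        asset_name, variable_name = path.split(".", 1)
        return self.assets[asset_name].variables[variable_name]

    def read(self, path: str) -> float:
        return self._lookup(path).value

    def write(self, path: str, value: float) -> None:
        self._lookup(path).value = value


def pressure_limiter(mw: Middleware, limit_bar: float = 6.0) -> None:
    """Hypothetical microservice linking two assets through the middleware."""
    if mw.read("pump1.pressure") > limit_bar:
        mw.write("valve1.opening", 0.0)


mw = Middleware()
mw.register(AssetObject("pump1"), SemanticVariable("pressure", "bar", 7.5))
mw.register(AssetObject("valve1"), SemanticVariable("opening", "%", 100.0))
pressure_limiter(mw)
print(mw.read("valve1.opening"))
```

A cloud client would use the same `read` and `write` calls as the microservice, so neither side needs to know which controller or fieldbus sits behind `pump1` or `valve1`.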

It creates a tiered model in which assets function independently of higher-level systems, while universal, uniform communication from middleware to cloud reduces complexity by structuring the entire system.

Edge Controllers Form a Model

Depending on the requirements, the middleware need not run on a single edge controller. Instead, it can be distributed across several systems that share a global data model. System limits dissolve, allowing computing power to scale almost without limit by adding controllers. Microservices also remove system boundaries. Semantic variables are now freely available and can be transferred via Ethernet or 5G as needed.

PTP (Precision Time Protocol) and TSN (Time-Sensitive Networking) enable a highly optimized multicast network that transports data across systems. While local microservices can cycle at up to 100 kHz, network data transfers remain stable at 10 kHz. Given that today's real-time systems operate at around 1 kHz, the middleware greatly exceeds current needs.
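To put these rates in perspective, it helps to convert them into the time available per cycle. The short Python sketch below does only that arithmetic; the function name `period_us` and the labels are illustrative choices, and the three rates are the ones quoted above.

```python
from fractions import Fraction


def period_us(rate_hz: int) -> Fraction:
    """Cycle period in microseconds for a cycle rate given in hertz."""
    return Fraction(1_000_000, rate_hz)


rates_hz = {
    "local microservice": 100_000,
    "network transfer": 10_000,
    "typical real-time system today": 1_000,
}

periods = {name: period_us(rate) for name, rate in rates_hz.items()}
for name, period in periods.items():
    print(f"{name}: {float(period):g} us per cycle")
```

In other words, a local microservice at 100 kHz has 10 microseconds per cycle, a network transfer at 10 kHz has 100 microseconds, and a 1 kHz system has a full millisecond.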

The "Real" Real-Time

So far, "real" real-time has not been addressed. Microservices represent data at the asset level and between digital objects on networked edge controllers.

Time, as well as data, can now be modeled. A timing model determines when data is sent to distributed applications. For example, a status change at an I/O port can cause a system-wide event to activate all connected microservices for a specific response.
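The event-driven case can be sketched as a simple publish and subscribe mechanism. This is an illustration of the idea only, not Xentara's timing model: `EventBus`, the event name `io.door_sensor.changed`, and the two handlers are hypothetical, and plain Python offers no real-time guarantees.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], Any]


class EventBus:
    """Routes one event to every microservice attached to it."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def attach(self, event: str, handler: Handler) -> None:
        self._handlers[event].append(handler)

    def fire(self, event: str, value: Any) -> List[Any]:
        # Handlers run in the order they were attached.
        return [handler(value) for handler in self._handlers[event]]


def stop_conveyor(door_open: bool) -> str:
    """Hypothetical microservice reacting to a safety door."""
    return "conveyor stopped" if door_open else "conveyor running"


def log_state(door_open: bool) -> str:
    """Hypothetical microservice recording the new state."""
    return f"door_open={door_open}"


bus = EventBus()
bus.attach("io.door_sensor.changed", stop_conveyor)
bus.attach("io.door_sensor.changed", log_state)

# A status change at the I/O port activates all attached microservices.
responses = bus.fire("io.door_sensor.changed", True)
print(responses)
```

In a distributed setup the handlers could live on different edge controllers; the sketch keeps them in one process to show only the triggering relationship.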

Alternatively, high-precision clocks cyclically distribute connected data across the network. In so-called tracks, asset-level data is gathered, transferred, processed by microservices, and returned to the asset level. Checkpoints on a track monitor and coordinate all time-sensitive processes.
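The cyclic case can be sketched as an ordered list of stages, each followed by a checkpoint that compares elapsed time against a deadline. The names `Stage` and `run_track`, the deadlines, and the sample data are assumptions made for this example; the article does not describe how Xentara implements tracks internally, and a real implementation would run on a real-time scheduler rather than in Python.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class Stage:
    name: str
    action: Callable[[Any], Any]
    deadline_us: float  # checkpoint: latest completion time after cycle start


def run_track(
    stages: List[Stage],
    data: Any = None,
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[Any, List[Tuple[str, bool]]]:
    """Run one cycle of a track and report which checkpoints were met."""
    start = clock()
    report = []
    for stage in stages:
        data = stage.action(data)
        elapsed_us = (clock() - start) * 1e6
        report.append((stage.name, elapsed_us <= stage.deadline_us))
    return data, report


# Gather asset data, process it, and return a result to the asset level.
stages = [
    Stage("gather", lambda _: [1.0, 2.0, 3.0], deadline_us=1_000_000),
    Stage("process", lambda xs: sum(xs) / len(xs), deadline_us=2_000_000),
    Stage("write back", lambda mean: {"setpoint": mean}, deadline_us=3_000_000),
]

result, report = run_track(stages)
print(result, report)
```

The deadlines here are deliberately generous (seconds rather than microseconds) so that the example passes on any machine. The `clock` parameter lets a test supply a simulated time source to check how a missed checkpoint is reported.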

This is what constitutes "real" real-time: the middleware ensures that data and microservices are provisioned and executed at exactly the times specified in the timing model. This occurs in a distributed setting involving processing cores, embedded systems, and fieldbuses.

What about machine learning?

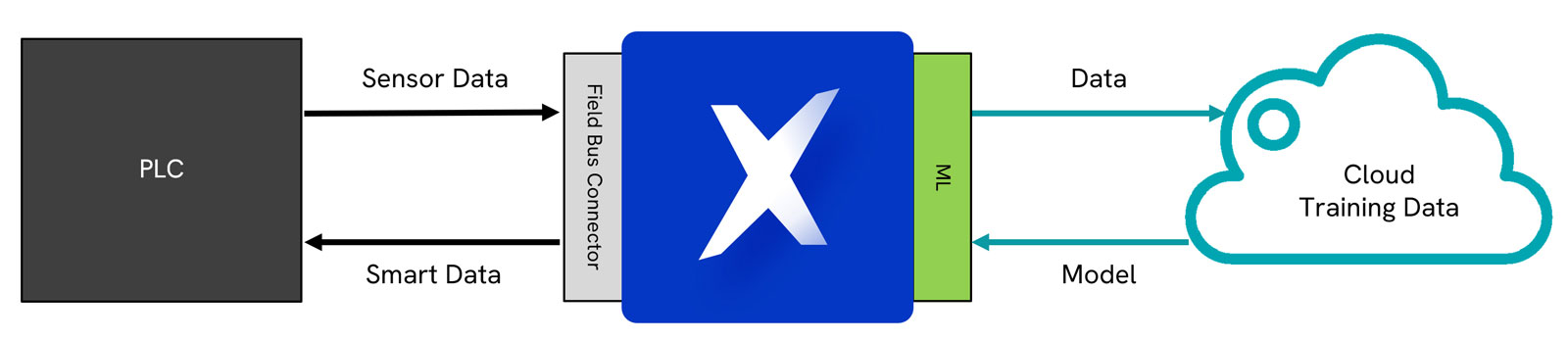

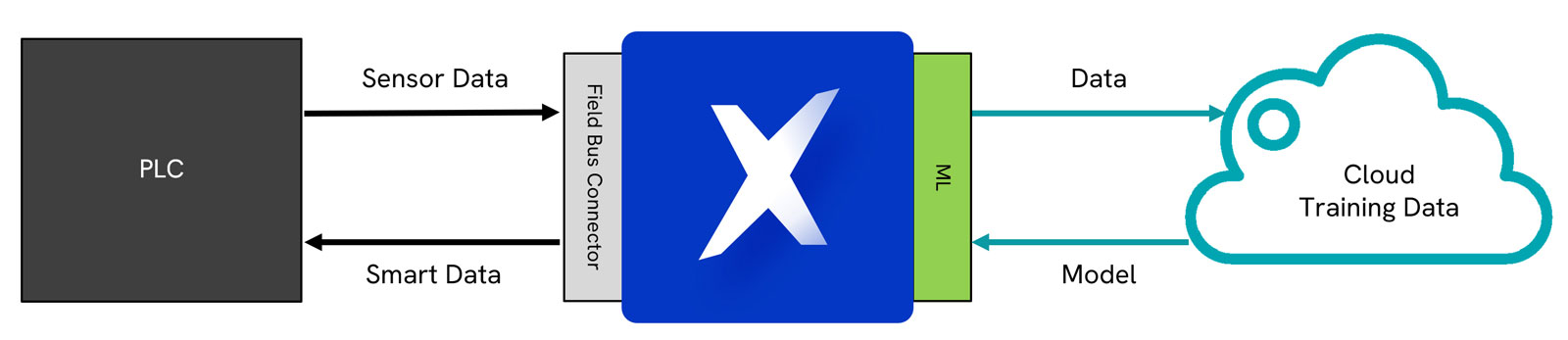

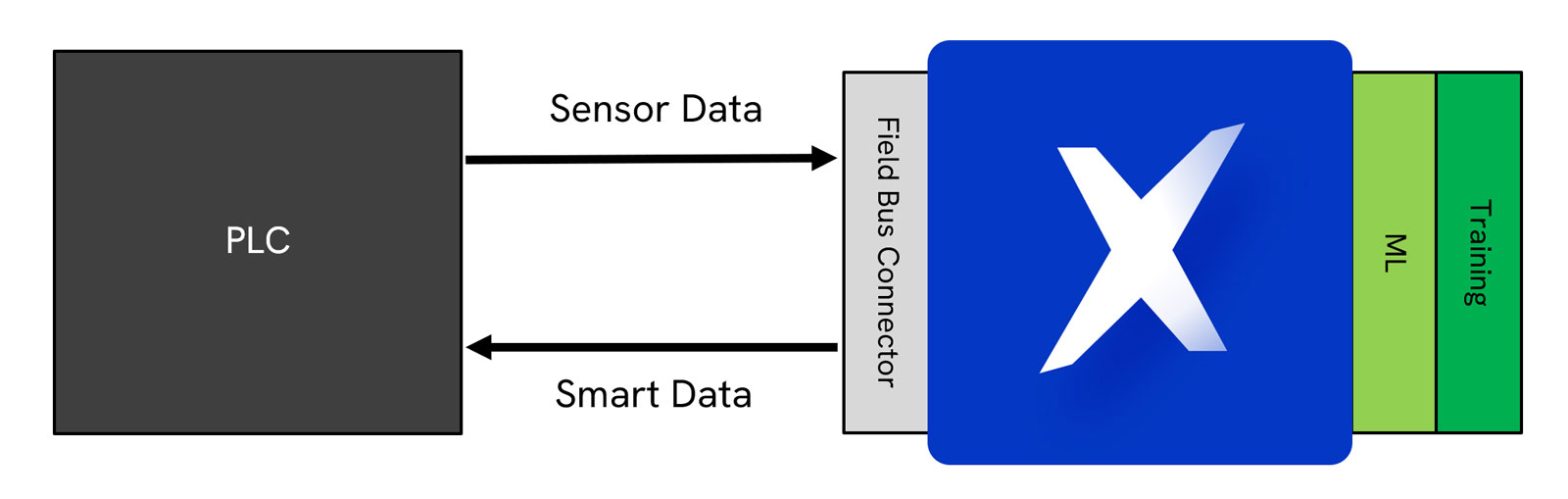

So far, we've discussed microservices that handle data at multiple levels. However, a microservice can also be a machine learning object that uses cloud-trained models. By coordinating ML services with the control systems of the underlying assets, logic gains intelligence.

Because the Xentara platform can transmit all essential data consistently across any timeframe, machine learning models may now be trained directly on the middleware. In many circumstances, this eliminates the need for pricey cloud-based training.

This increases the machine or manufacturing system's independence, flexibility, and adaptability. Resilient machines respond autonomously to changing conditions, allowing them to run more effectively over time. Machines optimize their own operations, thus production processes improve in quality.

Benefits

Currently, industrial control systems rely mainly on classic PLCs. Some operators are starting to investigate cloud-based business strategies. However, more work is required to truly connect machine learning to control systems, particularly for tasks such as vibration measurement and high-performance data acquisition. Semantic real-time middleware facilitates the training of machine learning algorithms at the edge. Machine learning combined with traditional control systems results in intelligent, robust machines that adapt to changing situations and call for external intervention when necessary.
